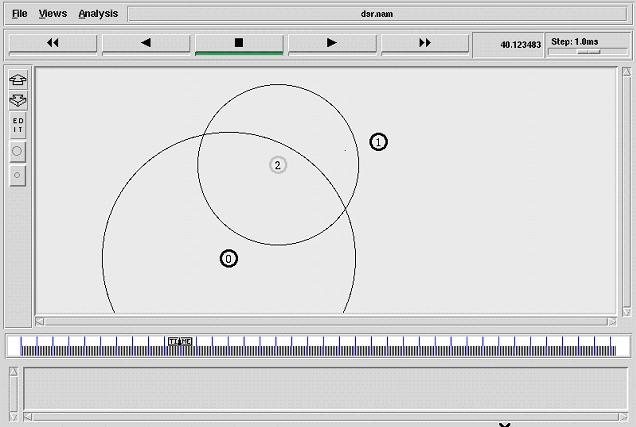

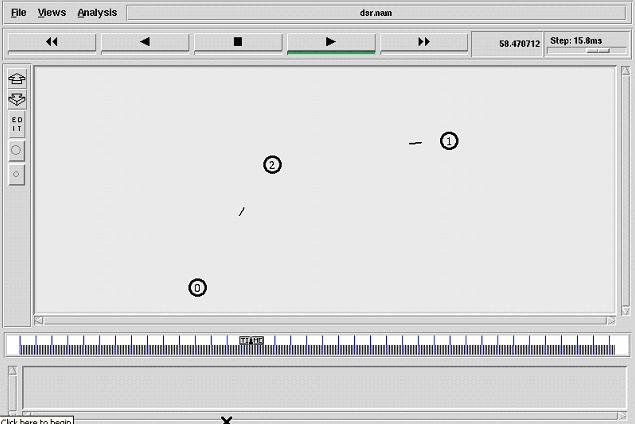

SaVi allows you to simulate satellite orbits and coverage in two and three dimensions. It is particularly useful for simulating satellite constellations such as Teledesic and Iridium.

Requirements:

SaVi requires:

an ANSI C compiler, e.g. gcc from http://www.gnu.org/software/gcc/

tested with, and builds under, egcs variants: gcc 2.95, 3.2 and 3.3.1.

Tcl and Tk, from http://www.tcl.tk/

most recently tested with Tcl/Tk 8.4; use of Tk color picker and load/save file dialogs demands a minimum of Tcl/Tk 7.6/4.2.

Tcl/Tk 8.x gives increased performance, and is recommended.

If an existing installation of Tcl does not include header files, e.g. /usr/include/tcl.h, you may be able to add these by installing the tcl-devel package.
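A quick way to check for the Tcl header files before building is sketched below. This is only an illustration: find_tcl_header is a hypothetical helper, not part of SaVi, and the directories in the example call are assumptions about where a system might keep tcl.h.

```shell
# Hypothetical helper (not part of SaVi): print the first directory in the
# argument list that contains tcl.h, or return non-zero if none does.
find_tcl_header() {
    for dir in "$@"; do
        if [ -f "$dir/tcl.h" ]; then
            printf '%s\n' "$dir"
            return 0
        fi
    done
    return 1
}

# Example call; the directory list is an assumption, adjust it for your system:
#   find_tcl_header /usr/include /usr/local/include
```

If no directory is printed, installing the tcl-devel package (or your system's equivalent) is the likely next step.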

SaVi can optionally use:

the zlib compression library, from http://www.gzip.org/zlib/

most recently tested with zlib 1.2.1. To build with zlib, which compresses the dynamic texturemaps that are sent to Geomview, remove the -DNO_ZLIB flag from src/Makefile.
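Removing the flag by hand in an editor is usually simplest. For scripted builds, a minimal sketch follows. It assumes the flag appears literally as -DNO_ZLIB in the Makefile; enable_zlib is a hypothetical helper name, and the sketch only strips the flag, so any other zlib-related settings in src/Makefile still need to be checked by hand.

```shell
# Hypothetical helper (not part of SaVi): remove every -DNO_ZLIB token from
# the given Makefile, keeping a backup copy next to it with a .orig suffix.
enable_zlib() {
    makefile=$1
    cp "$makefile" "$makefile.orig" || return 1
    # Strip the flag together with any whitespace immediately before it.
    sed 's/[[:space:]]*-DNO_ZLIB//g' "$makefile.orig" > "$makefile"
}

# Example call, run from the top of the SaVi tree:
#   enable_zlib src/Makefile
```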

the X Window system. SaVi's fisheye display requires X, but can be disabled by passing the -no-X command-line flag to SaVi. X libraries are required to compile SaVi.

Geomview, discussed below. Geomview requires an X Window installation.

SaVi has been successfully compiled and run on the following machines and unix-like operating systems:

Intel x86 / Linux (Red Hat 6.x, 7.0, 7.2; Fedora Core 2; Mandrake 9.0)

Sun SPARC / Solaris (2.4 and later)

SGI / Irix5

Intel x86 / Cygwin (1.5.9-1 to .12-1. Use of SaVi's fisheye view currently requires an X display, so the fisheye view is automatically disabled under Cygwin for the non-X Insight Tcl that is supplied with Cygwin. Compiling Tcl/Tk for use of SaVi with Geomview together in an X display window is recommended. You may also need to edit tcl/Makefile to run a custom tclsh executable with older versions of Cygwin.)

Installation

For the remainder of this file, we shall refer to the directory originally containing this README file, the root of the SaVi tree, as $SAVI. That is, if you are a user and have unpacked SaVi in your home directory, then $SAVI would be the topmost SaVi directory ~user/saviX.Y.Z that contains this README file that you are reading.

1.) In $SAVI/src/Makefile_defs.ARCH (where ARCH is linux, sun, irix, or cygwin), you may need to edit some variables to suit your system. If your system has recent versions of Tcl and Tk installed, and everything is in its usual place, the generic defs file called "Makefile_defs." may work as is, and typing 'make' in SaVi's topmost directory may be sufficient to compile the C files in src/ and index the Tcl files in tcl/.

If not, choose the Makefile_defs. file most suitable for your system and:

- edit the variables that give the locations of the Tcl/Tk libraries and header include files.

- edit the variables that point to the X11 libraries and include files.

- set the CC variable to an ANSI C compiler, e.g. gcc

2.) Return to the topmost SaVi directory, $SAVI, and type e.g. 'make ARCH=linux' (or sun, or irix, or cygwin). Typing just 'make' will use the default Makefile_defs. file.

3.) You may also need to edit the locations of the Tcl and Tk libraries in $SAVI/savi at the TCL_LIBRARY and TK_LIBRARY lines when linking dynamically.

If running the savi script to launch SaVi generates Tcl or Tk errors, it is often because the TCL_LIBRARY or TK_LIBRARY line needs to be corrected in that shell wrapper, or because make was not run using the top-level Makefile in the $SAVI directory. SaVi needs $SAVI/tcl/tclIndex to run. That tcl/tclIndex file must be generated by the tcl/Makefile, which, like all other subdirectory Makefiles, is called by the top-level master Makefile in the same directory as this README file.
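After building, a small check like the following can confirm that the files mentioned above are in place before you launch SaVi. It is a sketch only: check_savi_tree is a hypothetical helper, and it tests just for the savi script and tcl/tclIndex, not for a complete build.

```shell
# Hypothetical helper (not part of SaVi): report which of the files SaVi
# needs at launch are missing from a SaVi tree. Prints one line per missing
# file and returns non-zero if anything is missing.
check_savi_tree() {
    savi_root=$1
    status=0
    for f in savi tcl/tclIndex; do
        if [ ! -f "$savi_root/$f" ]; then
            printf 'missing: %s\n' "$savi_root/$f"
            status=1
        fi
    done
    return $status
}

# Example call, after running make in the topmost directory:
#   check_savi_tree "$SAVI"
```

If tcl/tclIndex is reported missing, rerun make from the topmost $SAVI directory rather than from src/.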

Using

As in the previous section, we refer to the directory containing this README file as $SAVI.

1.) To run SaVi standalone, without needing Geomview, in the $SAVI directory type:

./savi

Or from any other directory,

$SAVI/savi

To load a satellite Tcl script file directly, type:

./savi filename

SaVi supports a number of command-line switches, many related to use with Geomview. To see these, type:

./savi -help

2.) To run SaVi as a module within Geomview, for 3D rendering, when in the $SAVI directory start up Geomview:

geomview

and then select "SaVi" from Geomview's scrollable list of external modules. Or invoke directly:

geomview -run savi [flags] < script filename >

Or from any directory where you can start Geomview, try

geomview -run savi [flags] < script filename >

You might invoke a saved one-line script, to pass parameters through to SaVi:

geomview -run savi [always-on flags] $*
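As an illustration of such a saved script, the sketch below wraps the command in a shell function. The name run_savi_in_geomview and the GEOMVIEW override variable are assumptions made for this example, and -no-X merely stands in for whichever always-on flags you want. It uses "$@" rather than $* so that a script filename containing spaces is passed through as a single argument.

```shell
# Hypothetical wrapper (not part of SaVi). GEOMVIEW is an assumed override
# variable for this sketch; it defaults to the geomview found on your PATH.
run_savi_in_geomview() {
    # -no-X is only an example of an always-on flag; substitute your own.
    "${GEOMVIEW:-geomview}" -run savi -no-X "$@"
}

# Example call:
#   run_savi_in_geomview "my constellation.tcl"
```

A saved one-line script would contain just the command line from the function body.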

3.) To make SaVi accessible to other users, you can copy the "savi" script in $SAVI to some directory in other users' search paths such as /usr/local/bin, so they needn't add SaVi's own directory to their own path. If you do, edit the "savi" script, inserting the full path name of $SAVI as indicated in the script itself:

# If you copy this script from the SaVi installation and run it elsewhere,

# then you should uncomment the following line:

# SAVI=/usr/local/savi

# and replace /usr/local/savi with the location of

# your SaVi installation.

You can also make SaVi accessible from Geomview's scrollable list of external modules. Assuming Geomview is installed in /usr/local/Geomview, say:

cd /usr/local/Geomview/modules/sgi

Create a file here called ".geomview-savi" containing e.g.:

(emodule-define "SaVi" "/usr/local/savi1.2.6/savi")

where the right-hand side is the absolute path name for the savi script.
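The same file can be created from the shell. The sketch below is one way to do it; register_savi_module is a hypothetical helper, and the paths in the example call are the illustrative ones used above, so substitute your own Geomview modules directory and SaVi location.

```shell
# Hypothetical helper (not part of SaVi or Geomview): create .geomview-savi
# in a Geomview modules directory, pointing at the given savi script.
# The second argument should be the absolute path name of the savi script.
register_savi_module() {
    modules_dir=$1
    savi_script=$2
    printf '(emodule-define "SaVi" "%s")\n' "$savi_script" \
        > "$modules_dir/.geomview-savi"
}

# Example call, using the illustrative paths from this README:
#   register_savi_module /usr/local/Geomview/modules/sgi /usr/local/savi1.2.6/savi
```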

What's New in This Release:

This release makes SaVi easier to build on Linux systems by adjusting the Linux makefile definitions. See the RELEASE-NOTE file.

SaVi allows you to simulate satellite orbits and coverage, in two and three dimensions. SaVi is particularly useful for simulating satellite constellations such as Iridium and Globalstar. SaVi runs on Microsoft Windows (under Cygwin), on Macintosh OS X, Linux and Unix. To get started with SaVi, download SaVi 1.4.3 (from UK mirror). Read the SaVi user manual and learn about satellite constellations in a tutorial using SaVi. Then take a look at the optional but useful Geomview, which SaVi can use for 3D rendering. Further information on SaVi is available. SaVi is supported via the SaVi users mailing list. SaVi is developed at SourceForge. There is a SaVi developers mailing list.
